Sharing a Meta Quest Screen

How to use a Raspberry Pi to screencast the output of a Meta Quest 2 headset.

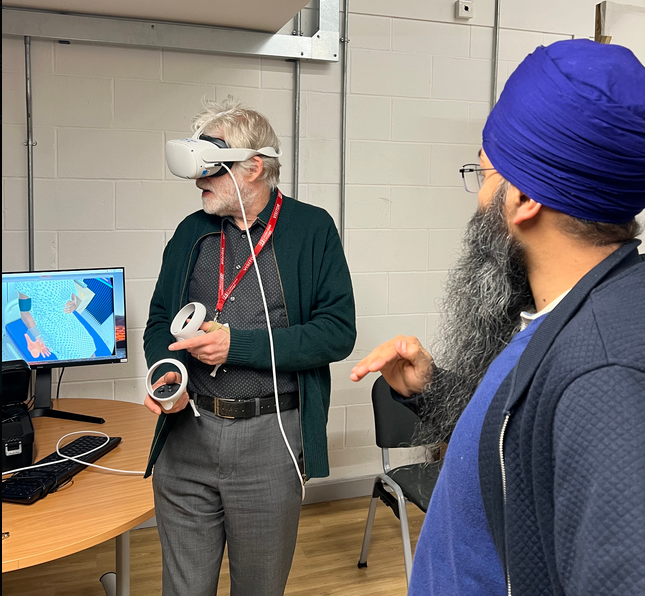

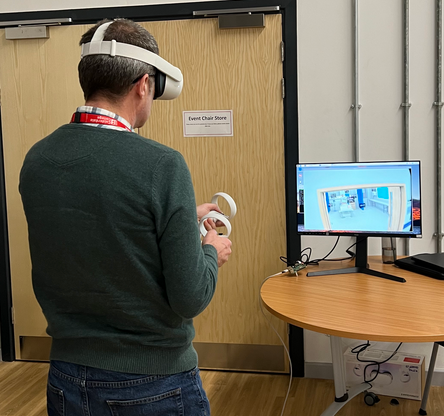

Recently I have been involved with a research project into how virtual reality devices, namely the Meta Quest 2, can be used to assess how well users perform within a ward-based environment.

The user is presented with a virtual world and is assessed on how well they deal, or fail to deal, with various issues presented within the environment.

The project has regularly been demonstrated to stakeholders and other research groups. Until recently this was achieved using existing equipment along with the third-party software SideQuest to mirror what the user can see onto an external monitor. However, shifting priorities meant that this equipment became temporarily unavailable.

Whilst SideQuest was suitable within our lab, it would not be suitable for general use outside it. It could potentially run on staff laptops and learner computers, but that would add another layer of administration: it would become one more package managed by our support unit and so be outside of our control.

This presented a good opportunity to find a solution that could remain in house, be spun up as needed, and stay under our control.

At a basic level the Quest 2 headset can be thought of as an Android phone with a fancy built-in screen. Having used a tool called scrcpy in the past to repurpose old Android devices as cameras, I wondered if this could be a potential solution.

Scrcpy's repository shows that the tool is cross-platform and supports Windows. However, it was unlikely that we could get it installed on our staff laptops due to policy restrictions. What we did have, though, was one or two Raspberry Pis kicking around.

According to the repository, scrcpy is currently at version 2.0, but the version available in the Pi's repository was 1.17, so the latest version had to be compiled. A set of instructions was found here and updated to use version 2.0 of the scrcpy-server, as shown below:

sudo apt -y install git wget meson libavformat-dev libsdl2-dev adb

git clone https://github.com/Genymobile/scrcpy.git

cd scrcpy

wget https://github.com/Genymobile/scrcpy/releases/download/v2.0/scrcpy-server-v2.0

meson x --buildtype release --strip -Db_lto=true -Dprebuilt_server=scrcpy-server-v2.0

ninja -Cx

sudo ninja -Cx install

This builds two packages, both of which need to be placed into /usr/bin
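Before plugging in a headset, it can be worth confirming that the tools actually resolve on the PATH. The snippet below is a minimal sketch, not part of the original instructions; the helper name `check_tool` is made up for illustration.

```shell
#!/bin/sh
# check_tool is a hypothetical helper: it prints where a command
# resolves on PATH, or reports that it is missing and returns 1.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        printf '%s: %s\n' "$1" "$(command -v "$1")"
    else
        printf '%s: not found\n' "$1"
        return 1
    fi
}

# Both tools are needed: adb talks to the headset, scrcpy mirrors it.
check_tool adb || true
check_tool scrcpy || true
```

If both are found, running `scrcpy --version` should report the freshly built version rather than the older packaged one.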

Once ready, this was optimistically tested on a Pi Zero, with the expected result: the Zero became overloaded and very laggy.

It was then tested on a 3B+. Whilst still laggy, it was usable, but it showed the contents of each eye lens in a separate window. After a bit of searching a solution was found here:

scrcpy --crop 1730:974:1934:450 --max-fps 15

With --max-fps set to 15, performance became a lot better in use, although there can be a delay of a second or two between what the user is seeing and what is displayed.
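The crop numbers are easier to adjust once their order is clear: scrcpy documents `--crop` as `width:height:x:y`, where x and y are the offset of the top-left corner. The sketch below wraps that in two helpers; the names `crop_args` and `crop_fits` are made up for illustration. The 3664x1920 frame size in the usage note is an assumption (two 1832x1920 eye images side by side), not something confirmed for what the headset actually sends.

```shell
#!/bin/sh
# crop_args (hypothetical helper) prints the scrcpy options for a crop
# region and a frame-rate cap. Argument order: width height x y fps.
crop_args() {
    printf '%s %s:%s:%s:%s %s %s\n' --crop "$1" "$2" "$3" "$4" --max-fps "$5"
}

# crop_fits (hypothetical helper) succeeds only if the crop rectangle
# lies inside a frame of the given size.
# Argument order: width height x y frame_width frame_height.
crop_fits() {
    [ $(($3 + $1)) -le "$5" ] && [ $(($4 + $2)) -le "$6" ]
}
```

For example, `crop_fits 1730 974 1934 450 3664 1920 && scrcpy $(crop_args 1730 974 1934 450 15)` runs the same command as above. Under the assumed frame size, the x offset of 1934 puts the crop in the right-hand eye image, and 1934 + 1730 lands exactly on the right edge at 3664.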

Also, as the ADB tools are installed, it's possible to install an APK directly from the Pi onto the headset, provided that developer mode is enabled. The headset will also need to be connected to an ADB server...try doing it with scrcpy running.

adb install -r <path to .apk>
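Before installing, it helps to check that the headset is visible to ADB and has been authorised. The sketch below parses the output of `adb devices`, which lists one serial per line followed by a state such as `device` or `unauthorized`. The helper names `count_ready_devices` and `install_apk` are made up for illustration.

```shell
#!/bin/sh
# count_ready_devices (hypothetical helper) reads `adb devices` output
# on stdin and prints how many entries are in the "device" state.
# The first line is the "List of devices attached" header, so skip it.
count_ready_devices() {
    awk 'NR > 1 && $2 == "device" { n++ } END { print n + 0 }'
}

# install_apk (hypothetical helper) installs only when exactly one
# authorised device is attached, so the APK cannot go to the wrong one.
install_apk() {
    ready=$(adb devices | count_ready_devices)
    if [ "$ready" -ne 1 ]; then
        echo "expected exactly one authorised device, found $ready" >&2
        return 1
    fi
    # -r replaces the app if it is already installed.
    adb install -r "$1"
}
```

Usage would be `install_apk <path to .apk>`. If the count comes back as 0 while the headset is plugged in, it is worth putting the headset on to look for a USB debugging prompt.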

Info about the Project:

https://www.ncfe.org.uk/help-shape-the-future-of-learning-and-assessment/aif-pilots/calderdale-college/

https://www.calderdale.ac.uk/calderdale-college-first-to-deliver-virtual-reality-social-care-curriculum/

https://twitter.com/CalderdaleCol/status/1600134106297139200

https://twitter.com/CalderdaleCol/status/1643272446345203713/photo/3